2025-10-30 AI Daily: Cursor 2.0 and Its In-House Model Composer Take On Cognition, Sora Temporarily Drops Invitation Codes, Can AI Really 'Introspect'?

On October 29, 2025 (daily reports cover the previous day's news), the AI field saw a burst of major announcements. AI code editor Cursor launched version 2.0 along with its in-house model, and Cognition AI answered with an ultra-fast agent model. At the same time, OpenAI's Sora dropped its invitation requirement in several regions, Google rolled out several updates for developers, and Anthropic published research suggesting that AI models may have a limited, preliminary capacity for ‘introspection’.

Today's AI world is truly bustling! From major upgrades to developer tools, to wider access to a leading video generation model, to striking research on AI introspection, tech giants and startups alike are picking up the pace, and competition keeps intensifying.

Let's take a quick look at today's must-read stories.

More Than Just an Editor: Cursor 2.0 Sets Out a New Paradigm for AI Development

AI-first code editor Cursor officially released its landmark Cursor 2.0 today, bringing a brand-new agent interface and a surprising “killer feature”: Composer, its first in-house agentic coding model.

According to the official blog, Composer is a frontier model whose biggest selling point is speed: it is four times faster than models of comparable intelligence. For developers, that means near-immediate responses and far less time spent waiting on the agent.

Beyond the new model, Cursor 2.0's interface has also been completely redesigned. The new multi-agent interface lets users run up to eight agents in parallel from a single prompt. To avoid file conflicts, it uses git worktrees or remote machines, so each agent works in its own copy of the codebase. You can have several AI assistants handle different tasks at once, or have different models tackle the same problem and then pick the best solution, which is close to a developer's dream.
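The isolation idea behind running several agents at once can be sketched with plain git: each agent gets its own worktree, a separate checkout on its own branch that shares the same repository history. The Python sketch below only builds `git worktree add` commands and runs nothing. It is not Cursor's implementation; the branch names (`agent-1`, `agent-2`, and so on) and the sibling-directory layout are hypothetical choices for illustration, and only the cap of eight comes from the announcement.

```python
from pathlib import Path

# Upper bound quoted in the Cursor 2.0 announcement; everything else is illustrative.
MAX_PARALLEL_AGENTS = 8


def worktree_commands(repo: Path, n_agents: int, base: str = "HEAD") -> list[list[str]]:
    """Build one `git worktree add` command per agent without executing anything.

    Each worktree is a separate checkout on its own new branch, so agents
    editing files in parallel never touch each other's working copies.
    """
    if not 1 <= n_agents <= MAX_PARALLEL_AGENTS:
        raise ValueError(f"n_agents must be between 1 and {MAX_PARALLEL_AGENTS}")

    commands = []
    for i in range(1, n_agents + 1):
        branch = f"agent-{i}"                          # hypothetical branch naming
        path = repo.parent / f"{repo.name}-agent-{i}"  # hypothetical sibling directory
        # `-b <branch>` creates a fresh branch starting at `base` for this worktree.
        commands.append(
            ["git", "-C", str(repo), "worktree", "add", "-b", branch, str(path), base]
        )
    return commands


if __name__ == "__main__":
    for cmd in worktree_commands(Path("/work/myapp"), 3):
        print(" ".join(cmd))
```

On a POSIX system the first printed line is `git -C /work/myapp worktree add -b agent-1 /work/myapp-agent-1 HEAD`. Passing each list to `subprocess.run(cmd, check=True)` would actually create the worktrees, and `git worktree remove <path>` cleans one up once its agent's result has been accepted or discarded.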

The changelog also lists several other improvements, including:

- Improved code review: Changes across multiple files are clear at a glance.

- Sandboxed terminals (GA): Now generally available and enabled by default on macOS, so agent commands run in a secure sandbox.

- Team commands and voice mode: Smoother team collaboration and human-computer interaction.

King of Speed Arrives! Cognition Releases SWE-1.5 Ultra-Fast Agent Model

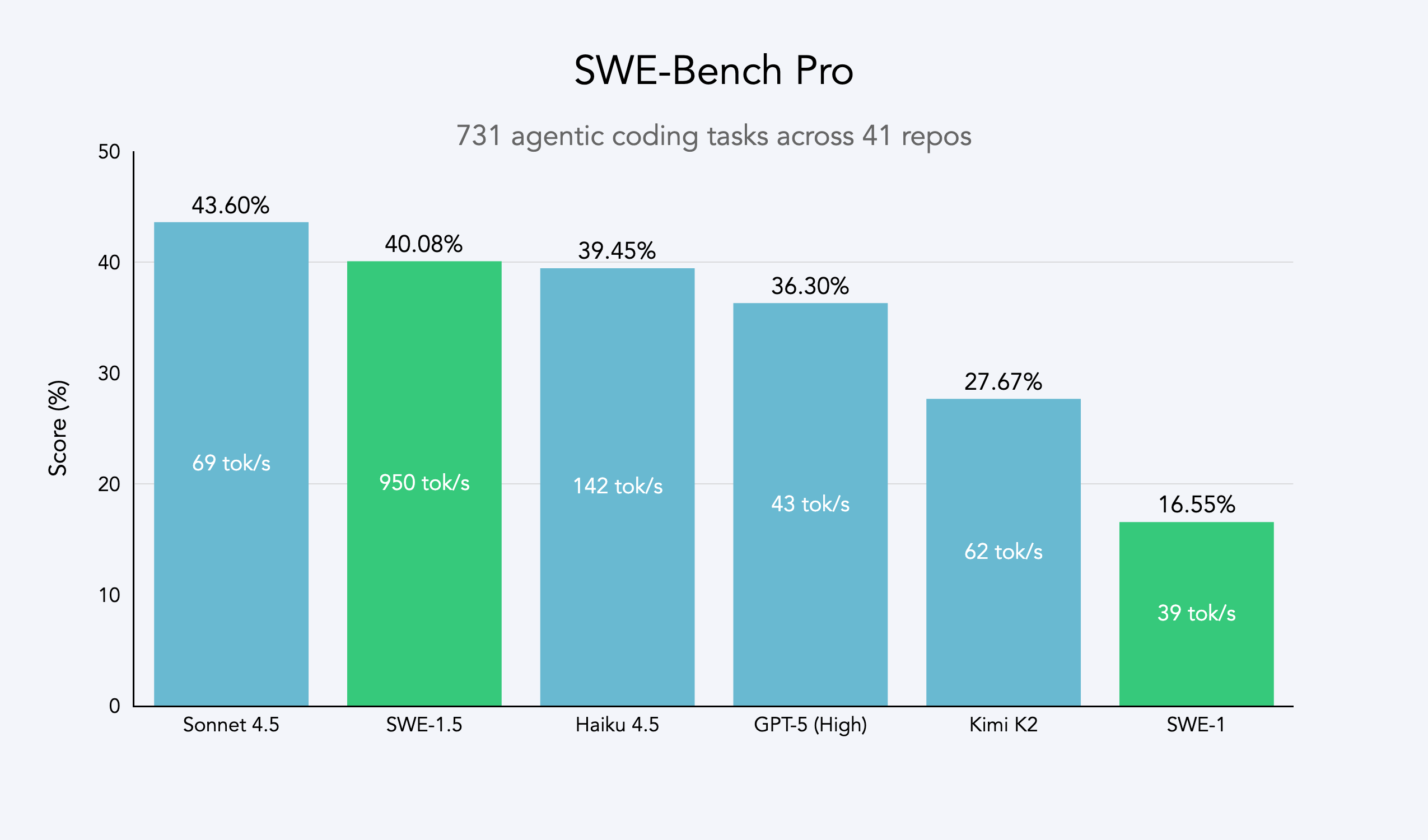

On the same day Cursor launched Composer, Cognition AI, known for its AI software engineer Devin, seemed to sense the challenge and quickly released its latest software engineering model, SWE-1.5.

Cursor pitches Composer as part of a complete editor experience; SWE-1.5's core selling point comes down to one word: speed.

Cognition partnered with Cerebras to run SWE-1.5 at up to an astonishing 950 tokens per second (tok/s), which it says is 6 times faster than Haiku 4.5 and 13 times faster than Sonnet 4.5. At that speed, many tasks that used to take tens of seconds finish in about 5 seconds. The race among AI coding assistants has clearly entered its “Fast and Furious” phase. SWE-1.5 is now available in Windsurf.
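The throughput figures translate into wall-clock time with simple division. The sketch below derives the speeds implied for Haiku 4.5 and Sonnet 4.5 from the quoted 6x and 13x ratios (they are not independently measured numbers) and assumes a hypothetical 4,000-token response. It counts pure generation time only, ignoring network latency, time to first token, and tool calls.

```python
SWE_15_TOKENS_PER_SECOND = 950.0

# Speeds implied by the quoted speed-up ratios, not measured values.
IMPLIED_SPEEDS = {
    "SWE-1.5": SWE_15_TOKENS_PER_SECOND,
    "Haiku 4.5 (implied, 1/6)": SWE_15_TOKENS_PER_SECOND / 6,
    "Sonnet 4.5 (implied, 1/13)": SWE_15_TOKENS_PER_SECOND / 13,
}


def generation_seconds(n_tokens: int, tokens_per_second: float) -> float:
    """Time to stream n_tokens at a constant decode rate."""
    return n_tokens / tokens_per_second


if __name__ == "__main__":
    response_tokens = 4_000  # hypothetical response length
    for model, tps in IMPLIED_SPEEDS.items():
        seconds = generation_seconds(response_tokens, tps)
        print(f"{model:28s} {tps:7.1f} tok/s -> {seconds:5.1f} s")
```

For this hypothetical response the times come out to roughly 4.2 s, 25.3 s, and 54.7 s, which fits the article's framing of tasks dropping from tens of seconds to about 5 seconds.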

Try Sora Without an Invite! Invitation Codes Temporarily Waived in the US, Canada, Japan, and South Korea

For content creators, the biggest news today is that OpenAI's Sora has, for now, dropped its invitation requirement.

OpenAI announced that its highly anticipated text-to-video app, Sora, will temporarily waive the invitation code requirement. Users in the United States, Canada, Japan, and South Korea can now download the iOS app or visit sora.com without waiting and sign in with their OpenAI account to try it.

This “limited-time” offer is an important step in Sora's move from closed beta to broader availability, and is likely also meant to test server capacity and gather more user feedback. If you are in one of these regions, don't miss the chance to try one of the leading AI video generation tools!

Pro User Perk: ChatGPT Pulse Comes to the Web

Beyond Sora, OpenAI also had an update for its paying Pro subscribers: the Pulse feature, previously limited to the mobile app, is now available on the web, so Pro users can use it from the desktop as well.

Your Asynchronous Code Partner: Google Launches Jules Extension for Gemini CLI

Google also had a gift for the developer community today: a Jules extension for the Gemini CLI.

Jules acts like an “autonomous partner” that handles tasks asynchronously. With simple commands in the Gemini CLI, developers can hand off time-consuming tasks (such as fixing bugs or making changes in a new branch) for Jules to work on in the background, while they stay focused on their current work without breaking their flow. The extension is available on GitHub.

Good News for Developers! Gemini 2.5 Implicit Cache Discount Rises to 90%

Google's news for developers doesn't stop there. According to an official announcement, Google has raised the implicit caching discount for Gemini 2.5 models from 75% to 90%.

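In practice, input tokens served from the cache, such as a long system prompt or document repeated across requests, are now billed at 10% of the normal input price instead of 25%, which substantially lowers costs for applications that resend the same context. The change should encourage developers to use the models more efficiently and lower the barrier to building applications.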
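The effect of the higher discount is easy to work out. The sketch below assumes the discount applies only to input tokens served from the cache, uses a made-up list price of $1.00 per million input tokens (not Google's actual pricing), and ignores output tokens and any other billing details.

```python
def input_cost_usd(prompt_tokens: int, cached_tokens: int,
                   price_per_million: float, cache_discount: float) -> float:
    """Cost of a prompt's input tokens when `cached_tokens` of them hit the cache.

    Cached tokens are billed at (1 - cache_discount) of the normal price;
    the remaining tokens are billed at the full price.
    """
    if not 0 <= cached_tokens <= prompt_tokens:
        raise ValueError("cached_tokens must be between 0 and prompt_tokens")
    uncached = prompt_tokens - cached_tokens
    billed_tokens = uncached + cached_tokens * (1 - cache_discount)
    return billed_tokens * price_per_million / 1_000_000


if __name__ == "__main__":
    PRICE = 1.00  # hypothetical USD per 1M input tokens
    # A 100k-token prompt whose first 80k tokens (e.g. a shared document) are cached.
    old = input_cost_usd(100_000, 80_000, PRICE, cache_discount=0.75)
    new = input_cost_usd(100_000, 80_000, PRICE, cache_discount=0.90)
    print(f"75% discount: ${old:.3f}  90% discount: ${new:.3f}")
```

With these assumed numbers, the input cost per request falls from $0.040 to $0.028, a further 30% saving on top of the old discount. The more of each prompt that is reusable context, the larger the gain.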

Set Your Own Safety Rules! OpenAI Releases gpt-oss-safeguard Open-Weight Models

On the AI safety front, OpenAI also took an important step, releasing gpt-oss-safeguard, a family of open-weight safety models.

The defining feature of these models (available in 120b and 20b sizes) is flexibility. Traditional safety classifiers are trained on a fixed, predefined policy, whereas gpt-oss-safeguard lets developers supply their own safety policies at runtime. The model then uses its reasoning capabilities to interpret and apply those custom policies.

This “bring your own policy” design lets developers draw their own safety lines for their specific use case (the requirements for a gaming forum and a financial app, for example, are completely different). The model's “chain of thought” also shows developers the reasoning behind each judgment, giving them more customization and transparency.
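The “policy as input” idea can be illustrated by how a classification request is assembled. The sketch assumes the common chat layout of policy in the system message and content to judge in the user message; the exact prompt format and output labels the model expects should be taken from OpenAI's gpt-oss-safeguard documentation. Both policies and the `build_classification_messages` helper are hypothetical, and the code only builds message lists that could be sent to a chat endpoint serving the model; it makes no API call.

```python
# Hypothetical policies: the same model could enforce either one, because the
# policy travels with each request instead of being fixed at training time.
GAMING_FORUM_POLICY = """\
Policy: gaming forum
- ALLOWED: trash talk about in-game performance, discussion of in-game violence.
- VIOLATES: threats against real people, tools for hijacking other players' accounts.
Answer VIOLATES or ALLOWED, then give a one-line reason."""

FINANCE_APP_POLICY = """\
Policy: personal-finance app
- ALLOWED: general questions about budgeting, saving, and interest rates.
- VIOLATES: guaranteed-return investment pitches, requests for others' credentials.
Answer VIOLATES or ALLOWED, then give a one-line reason."""


def build_classification_messages(policy: str, content: str) -> list[dict[str, str]]:
    """Pair a developer-written policy with the content to be classified."""
    return [
        {"role": "system", "content": policy},  # the rules to apply
        {"role": "user", "content": content},   # the text to judge
    ]


if __name__ == "__main__":
    post = "Get 20% monthly returns, guaranteed! DM me your bank login to start."
    for policy in (GAMING_FORUM_POLICY, FINANCE_APP_POLICY):
        messages = build_classification_messages(policy, post)
        # Only the policy line differs between the two requests.
        print(messages[0]["content"].splitlines()[0], "|", messages[1]["content"])
```

Moving from one application's rules to another's only requires swapping the policy string; no retraining is involved, and the model's chain of thought is what lets developers check how it applied each rule.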

What Is Claude Thinking? Anthropic Research Reveals AI's Limited ‘Introspective’ Ability

Perhaps the most thought-provoking news today is a research paper from Anthropic on introspection in large language models.

When you ask an AI “What are you thinking?”, have you ever wondered whether its answer is genuine or made up? Anthropic's research tries to answer this question scientifically. The researchers used an experimental technique called “concept injection” to implant specific “thoughts” (in the form of neural activity patterns) into Claude's internal activations, then checked whether the model could detect and report these “foreign thoughts.”
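The vector arithmetic behind concept injection can be illustrated on toy data. Following a common recipe from activation-steering work, the sketch builds a “concept vector” as the difference between mean activations on concept-related inputs and on baseline inputs, then adds a scaled copy of it to a hidden state. Everything here is an assumption for illustration: the random 16-dimensional “activations”, the hidden direction, and the strength value stand in for a real model's internal state, and the cosine-similarity check is only a geometric proxy. In Anthropic's experiments, “detection” means the model itself reporting the injected thought in words, which this toy cannot reproduce.

```python
import math
import random

D_MODEL = 16  # toy hidden size; real models use thousands of dimensions
rng = random.Random(0)


def randn(n: int) -> list[float]:
    return [rng.gauss(0.0, 1.0) for _ in range(n)]


def mean(vectors: list[list[float]]) -> list[float]:
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def concept_vector(concept_acts: list[list[float]],
                   baseline_acts: list[list[float]]) -> list[float]:
    """Mean activation on concept inputs minus mean activation on baseline inputs."""
    return [c - b for c, b in zip(mean(concept_acts), mean(baseline_acts))]


def inject(hidden: list[float], vector: list[float], strength: float) -> list[float]:
    """Add a scaled concept vector to a hidden state (the 'injection')."""
    return [h + strength * v for h, v in zip(hidden, vector)]


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


# Toy stand-ins for recorded activations: concept-related inputs share a
# hidden direction, baseline inputs do not.
true_direction = randn(D_MODEL)
concept_acts = [[x + t for x, t in zip(randn(D_MODEL), true_direction)] for _ in range(20)]
baseline_acts = [randn(D_MODEL) for _ in range(200)]

if __name__ == "__main__":
    vec = concept_vector(concept_acts, baseline_acts)
    hidden = randn(D_MODEL)  # an unrelated hidden state
    steered = inject(hidden, vec, strength=4.0)
    print(f"alignment with concept before: {cosine(hidden, vec):+.2f}")
    print(f"alignment with concept after:  {cosine(steered, vec):+.2f}")
```

Adding a positive multiple of the vector always pulls the hidden state toward the concept direction; the open question the research probes is whether the model notices that shift from the inside and can say so.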

The research found that top models such as Claude Opus 4.1 can, in some cases, notice injected concepts and report that something unusual is happening in their internal processing. The ability is still highly unreliable (detection succeeded only about 20% of the time), but it offers early scientific evidence that AI may have some limited ability to monitor and report on its own internal states.

The finding could have far-reaching implications for AI transparency and reliability, and even for how humans and AI interact in the future. We are still far from truly understanding the “inner world” of AI, but this research opens a promising new line of inquiry.