PartCrafter: From a Single Image to Structured 3D Models in One Click—The Next Milestone in AI Generation?

Imagine giving an AI a simple 2D photo and instantly getting back a detailed, editable 3D model that can be taken apart into its components. Sounds like science fiction? A newly announced AI model, PartCrafter, aims to make it a reality. What exactly is this cutting-edge technology, and how might it change the workflows of 3D artists and game developers?

Have you ever dreamed of turning a photo of a chair or a cool car into a 3D model ready for games or animations? In the past, this required 3D artists to spend hours or even days painstakingly sculpting, modeling, and texturing in specialized software.

Honestly, it’s a grueling process.

But now, a new research project called PartCrafter could transform that workflow. Developed by a team of researchers, it has a single goal: to make generating 3D content easier, faster, and, most importantly, smarter.

What is this “magic tech”? Introducing Structured 3D Generation

So, what exactly is PartCrafter? In short, it’s an AI model that can take a regular 2D image (in RGB format) and “magically” create a full 3D mesh in just seconds.

You might be thinking, “Wait, hasn’t this tech been around for a while?”

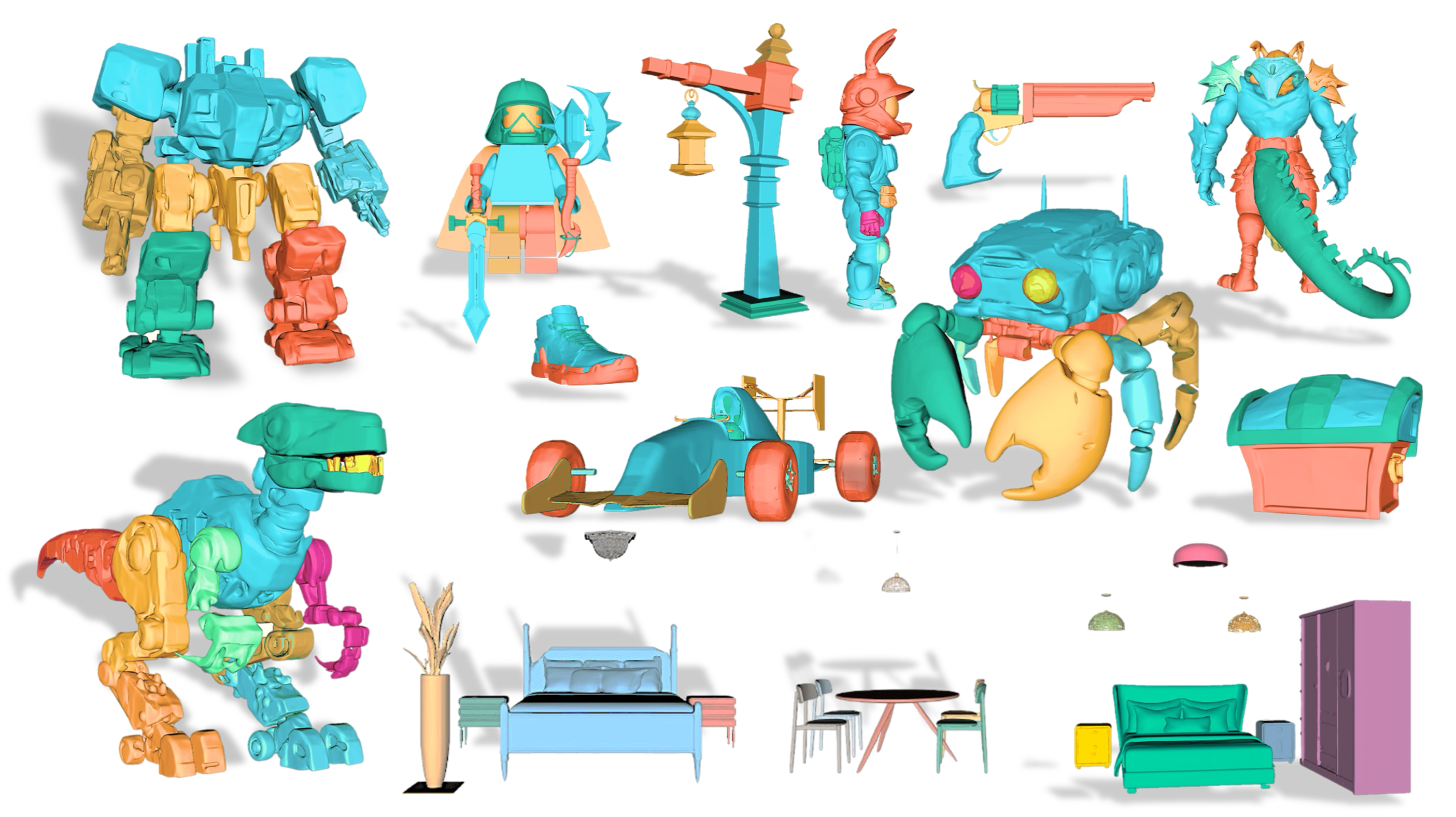

Yes, there are already tools that generate 3D models from images. But what makes PartCrafter special is its focus on being structured and compositional.

Most previous models output a single, monolithic 3D object—like a solid plaster statue. Want to move a chair leg or swap a car wheel? Good luck. You’re stuck with a “dead” model.

PartCrafter is different. It generates structured models.

Think of it like building with LEGO. Instead of giving you a fully built house, it gives you the building blocks—walls, roof, windows—and understands how they fit together. When it creates a chair, it knows the chair has a backrest, a seat, and four legs. These parts are individually editable components.
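To make the LEGO analogy concrete, here is a minimal, hypothetical Python sketch of a part-structured model: each named part keeps its own vertices and faces, so moving or swapping one part never touches the others. The `Part` and `StructuredModel` classes and the `quad` helper are our own illustration, not PartCrafter's output format, and the chair is reduced to flat rectangles (a seat plus four leg footprints) to keep it short.

```python
from dataclasses import dataclass, field

# Hypothetical, simplified part-structured model (not PartCrafter's real format).
Vertex = tuple[float, float, float]
Face = tuple[int, int, int]  # indices into the owning part's own vertex list


@dataclass
class Part:
    name: str
    vertices: list[Vertex]
    faces: list[Face]


@dataclass
class StructuredModel:
    parts: dict[str, Part] = field(default_factory=dict)

    def add(self, part: Part) -> None:
        self.parts[part.name] = part

    def translate_part(self, name: str, dx: float, dy: float, dz: float) -> None:
        """Move a single part; every other part stays exactly as it was."""
        part = self.parts[name]
        part.vertices = [(x + dx, y + dy, z + dz) for x, y, z in part.vertices]

    def replace_part(self, new_part: Part) -> None:
        """Swap in a new version of an existing part, matched by name."""
        if new_part.name not in self.parts:
            raise KeyError(f"no part named {new_part.name!r}")
        self.parts[new_part.name] = new_part


def quad(name: str, x: float, y: float, z: float, w: float, d: float) -> Part:
    """Horizontal w-by-d rectangle at height z, split into two triangles."""
    verts = [(x, y, z), (x + w, y, z), (x + w, y + d, z), (x, y + d, z)]
    return Part(name, verts, [(0, 1, 2), (0, 2, 3)])


# A toy "chair": one seat plus four leg footprints (backrest omitted for brevity).
chair = StructuredModel()
chair.add(quad("seat", 0.0, 0.0, 0.45, 0.5, 0.5))
for i, (x, y) in enumerate([(0.0, 0.0), (0.45, 0.0), (0.0, 0.45), (0.45, 0.45)]):
    chair.add(quad(f"leg_{i}", x, y, 0.0, 0.05, 0.05))

chair.translate_part("leg_0", 0.0, 0.0, -0.1)              # nudge one leg down
chair.replace_part(quad("seat", 0.0, 0.0, 0.5, 0.5, 0.5))  # swap in a higher seat
print(sorted(chair.parts))  # ['leg_0', 'leg_1', 'leg_2', 'leg_3', 'seat']
```

With a single fused mesh, the same edit would first require figuring out which vertices belong to the leg; here that information is part of the model itself.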

Even more impressively, it can handle multiple objects and understand the relationships between them. That’s why it’s called a “Compositional Latent Diffusion Transformer.”
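In transformer terms, "compositional" can be pictured like this: every part gets its own group of latent tokens tagged with a part identity, and attention runs both among tokens of the same part and among tokens of all parts. The NumPy sketch below is a toy illustration of that idea under our own assumptions (single head, no learned weights, random inputs). Names such as `compositional_block` and `part_embeddings` are hypothetical, and this is not PartCrafter's implementation.

```python
import numpy as np


def attention(x: np.ndarray, mask: np.ndarray) -> np.ndarray:
    """Single-head scaled dot-product self-attention.

    For brevity there are no learned projections: queries, keys and values
    are all x. mask[i, j] is True where token i may attend to token j.
    """
    scores = x @ x.T / np.sqrt(x.shape[-1])
    scores = np.where(mask, scores, -np.inf)
    scores = scores - scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    return weights @ x


def compositional_block(tokens, part_ids, part_embeddings):
    """One toy block: per-part (local) attention, then cross-part (global) attention.

    tokens:          (N, d) latent tokens of all parts, concatenated
    part_ids:        (N,)   index of the part each token belongs to
    part_embeddings: (P, d) one identity vector per part (hypothetical)
    """
    x = tokens + part_embeddings[part_ids]               # tag each token with its part
    local_mask = part_ids[:, None] == part_ids[None, :]  # same part only
    global_mask = np.ones_like(local_mask)               # all tokens
    x = x + attention(x, local_mask)   # refine detail inside each part
    x = x + attention(x, global_mask)  # keep parts consistent with each other
    return x


rng = np.random.default_rng(0)
num_parts, tokens_per_part, dim = 3, 4, 8
part_ids = np.repeat(np.arange(num_parts), tokens_per_part)
tokens = rng.normal(size=(num_parts * tokens_per_part, dim))
part_embeddings = rng.normal(size=(num_parts, dim))

out = compositional_block(tokens, part_ids, part_embeddings)
print(out.shape)  # (12, 8)
```

The local pass lets a leg's tokens refine the leg itself, while the global pass is where, for example, the legs can "see" the seat they need to attach to.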

What Makes PartCrafter Unique?

How does it compare to other 3D generation tools out there? The key lies in how it understands object structure.

- Single vs. Structured Models: Most tools generate a single, solid mesh. PartCrafter generates a collection of meaningful, independent parts.

- Post-editing Made Easy: Want to tweak the model? With traditional tools, you might have to start over. With PartCrafter, you can easily modify or swap individual parts—like playing with modular toys. This is a game-changer for 3D artists and animators.

- Greater Realism: Because it models how parts fit together, the resulting models are more likely to be structurally plausible. A generated chair, for example, should come out with a complete set of legs rather than missing or fused ones.

All of this significantly narrows the gap between AI-generated output and real-world use, whether in game engines or animation software.
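As an illustration of why separate parts travel well into other tools, this hypothetical `to_obj` helper writes a list of parts as a Wavefront OBJ file with one `o` (named object) statement per part. It assumes each part arrives as a name, a vertex list, and 0-based triangle indices; PartCrafter's actual export format is not specified here. OBJ face indices are 1-based and count across the whole file, which is why the function keeps a running offset.

```python
def to_obj(parts) -> str:
    """Serialize parts to Wavefront OBJ text, one named object ("o") per part.

    parts: iterable of (name, vertices, faces), where faces are 0-based indices
    into that part's own vertex list (a hypothetical input format).
    """
    lines = []
    offset = 0  # OBJ indices are 1-based and global across the whole file
    for name, vertices, faces in parts:
        lines.append(f"o {name}")
        for x, y, z in vertices:
            lines.append(f"v {x} {y} {z}")
        for face in faces:
            lines.append("f " + " ".join(str(offset + i + 1) for i in face))
        offset += len(vertices)
    return "\n".join(lines) + "\n"


parts = [
    ("seat", [(0.0, 0.0, 0.45), (0.5, 0.0, 0.45), (0.5, 0.5, 0.45)], [(0, 1, 2)]),
    ("leg_0", [(0.0, 0.0, 0.0), (0.05, 0.0, 0.0), (0.0, 0.0, 0.45)], [(0, 1, 2)]),
]
obj_text = to_obj(parts)
print(obj_text, end="")
```

Importers that respect `o` statements, such as Blender's, typically bring each part in as its own object, so the structure survives the trip into other software.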

The Tech Behind It: Latent Diffusion Meets Transformer

Sounds magical, right? The core tech behind PartCrafter is a powerful combination of Latent Diffusion Models (LDMs) and Transformers.

Here’s a simple analogy:

- Transformer’s Role: Think of it as a smart “structure analyst.” Looking at an image, it doesn’t just see colors and shapes; it understands that “this is a chair made of a seat and legs.” Transformers are the same family of architecture that powers ChatGPT’s grasp of language context, but here one is used to capture the “visual grammar” of objects.

- Latent Diffusion’s Role: This is the “master builder.” Working in a compressed representation of 3D shapes (the latent space), it starts from random noise and refines it step by step into a detailed 3D shape, consulting the Transformer at every step to decide which noise to remove. A decoder then turns the finished latent into actual meshes.

Together, they give PartCrafter both a high-level understanding of structure and the ability to generate fine-grained details.
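The "master builder" loop can be sketched as standard DDPM-style sampling in a latent space. This is a toy under stated assumptions: a linear noise schedule, 50 steps, and an `oracle_denoiser` that already knows the answer, standing in for the image-conditioned Transformer. The latent shape (parts x tokens x channels) is hypothetical, the decoder that would turn the final latent into meshes is not shown, and PartCrafter's actual sampler and schedule may differ.

```python
import numpy as np


def make_schedule(num_steps: int):
    """Linear beta (noise) schedule and its cumulative products."""
    betas = np.linspace(1e-4, 0.02, num_steps)
    alphas = 1.0 - betas
    alpha_bars = np.cumprod(alphas)
    return betas, alphas, alpha_bars


def sample(predict_noise, shape, num_steps, seed=0):
    """DDPM-style ancestral sampling: start from noise, denoise step by step."""
    rng = np.random.default_rng(seed)
    betas, alphas, alpha_bars = make_schedule(num_steps)
    x = rng.normal(size=shape)  # pure Gaussian noise in latent space
    for t in reversed(range(num_steps)):
        eps = predict_noise(x, t)  # the network's estimate of the noise in x
        mean = (x - betas[t] / np.sqrt(1.0 - alpha_bars[t]) * eps) / np.sqrt(alphas[t])
        if t > 0:
            x = mean + np.sqrt(betas[t]) * rng.normal(size=shape)
        else:
            x = mean  # no noise is added on the final step
    return x


def oracle_denoiser(target, num_steps):
    """Stand-in for the trained, image-conditioned Transformer.

    It cheats by knowing the clean latent `target` and returns the exact
    noise separating x_t from it, so the loop's mechanics can be checked.
    """
    _, _, alpha_bars = make_schedule(num_steps)

    def predict_noise(x, t):
        return (x - np.sqrt(alpha_bars[t]) * target) / np.sqrt(1.0 - alpha_bars[t])

    return predict_noise


num_steps = 50
# Hypothetical latent: 3 parts x 4 tokens per part x 8 channels.
target = np.random.default_rng(1).normal(size=(3, 4, 8))
latent = sample(oracle_denoiser(target, num_steps), target.shape, num_steps)
print(np.allclose(latent, target))  # True
```

In a real model the oracle is replaced by a learned network that only sees the noisy latent, the step index, and the input image, which is where the "understanding" described above has to come from.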

Why Does This Matter?

PartCrafter’s arrival could significantly impact several industries:

- Game Developers: Rapidly prototype in-game assets within minutes, speeding up world-building processes.

- 3D Artists: Skip the time-consuming base modeling phase and focus on refining and adding creative flair.

- AR/VR Creators: Populate augmented and virtual environments with massive amounts of 3D content faster than ever.

For those interested in the technical side, you can check out their research paper or follow their official project page for updates.

Frequently Asked Questions (FAQ)

Q1: Can I try PartCrafter right now?

Not yet. According to the development team, a demo will be available soon on Hugging Face 🤗, along with the pre-trained model and code. Stay tuned!

Q2: Is the project open source?

Yes! PartCrafter is being released under the MIT License, which means that once the code and model are out, anyone can freely use, modify, and distribute them, as long as the license notice is kept. That is a big win for the open-source community.

Q3: How is it different from TripoSR or other 2D-to-3D tools?

The key difference is structure. Most tools generate a single, fused 3D model. PartCrafter creates a “structured” model composed of multiple independent parts. You can manipulate the legs of a chair or the doors of a car separately—this is a revolutionary shift.

Conclusion

PartCrafter isn’t just another tool that turns images into 3D models. It represents a major leap in AI’s ability to understand how the world is built. From mimicking appearances to grasping internal structures, this technology opens up new creative possibilities for the future of 3D content.

Although we can’t use it yet, the concept and early results are already exciting. Let’s look forward to the official release of PartCrafter!

Acknowledgments: The PartCrafter research team extends special thanks to the creators of TripoSG, HoloPart, and MIDI-3D. Their open-source contributions were a major source of inspiration and support—highlighting the true spirit of the open-source community: learning from one another and growing together.