Make Photos Talk! Alibaba Open-Sources Wan2.2 Model, Generating Videos from a Single Image and Audio

Imagine bringing a still photo to life, making the person in it speak with just a voice recording. This is no longer science fiction. Alibaba’s Wan team has officially open-sourced its latest audio-driven video generation model, Wan2.2-S2V-14B, opening up new possibilities for content creation and digital interaction.

Have you ever wished that the old, dusty photograph of your grandparents at home could come to life, with them personally telling you stories from their past? Or that your digital avatar could deliver a speech in your own voice?

It sounds a bit like magic, but technology is constantly turning magic into reality. Just today, Alibaba’s Wan team dropped a bombshell, officially announcing the open-sourcing of their latest AI video generation model—Wan2.2-S2V-14B.

Simply put, this is a tool that can “understand” sound and make pictures “move.” Give it a static image and an audio clip, and it generates a video whose lip movements, expressions, and head poses are synchronized with the audio.

So, what exactly is Wan2.2-S2V?

Let’s break down the name. S2V stands for “Speech-to-Video,” which points directly to its core function. The model’s strength lies in capturing subtle changes in the audio, such as the rise and fall of intonation, the rhythm of pauses, and the mouth shapes of pronunciation, and translating them into natural-looking facial animation.

This is not just simple “lip-syncing.” Wan2.2-S2V analyzes the audio as a whole to generate videos with subtle expressions and natural head movements, so the result looks less like a stiff robot and more like a living, breathing person.

Currently, the model reliably generates video at 480P and, under ideal conditions, can reach 720P. That is more than enough for social media short videos, online courses, or virtual customer service.
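To make the idea of audio-synchronized frames concrete, here is a minimal sketch of how an audio clip can be sliced into one window of samples per video frame. It is not the Wan team’s pipeline: the helper name `audio_windows_per_frame` is hypothetical, and the default of 16 frames per second is an illustrative assumption, not a documented setting of the model.

```python
import wave


def audio_windows_per_frame(wav_path, fps=16):
    """Return one (start_sample, end_sample) window per video frame.

    ASSUMPTION: fps=16 is only an illustrative default, not a value
    taken from the Wan2.2-S2V documentation.
    """
    with wave.open(wav_path, "rb") as wav:
        sample_rate = wav.getframerate()   # audio samples per second
        total_samples = wav.getnframes()   # samples per channel in the clip

    # Integer ceiling: enough frames to cover a partial final window.
    num_frames = (total_samples * fps + sample_rate - 1) // sample_rate

    windows = []
    for i in range(num_frames):
        start = i * sample_rate // fps
        end = min((i + 1) * sample_rate // fps, total_samples)
        windows.append((start, end))
    return windows
```

Each window is the stretch of audio that a single frame has to “act out,” which is why longer recordings need proportionally more generated frames.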

Why is the open-sourcing of this technology so important?

You might be thinking, with so many AI tools already on the market, what’s the big deal about one more Wan2.2?

It’s not that simple. The key lies in the words “open source.”

When a powerful AI model is open-sourced, developers, researchers, and artists all over the world can access its source code and model weights for free. It’s like a top chef not only serving a delicious dish but also publishing the exclusive recipe for everyone.

This will have several huge impacts:

- Accelerating Innovation: Countless developers can modify, optimize, or integrate Wan2.2 into their own applications, giving rise to creative uses we can hardly imagine right now.

- Lowering the Barrier to Entry: In the past, similar technologies were often in the hands of a few large companies, with high development costs. Open source allows small teams and even individual creators to use cutting-edge AI video generation technology.

- Promoting Community Development: An active open-source community can continuously identify problems, contribute code, and share experiences, making the model itself stronger and the ecosystem more prosperous.

This is not just a cool toy; it’s more like a creative cornerstone, ready for everyone to build their own fantastic ideas upon.

Want to try it yourself? It’s not difficult at all!

After all this talk, it’s better to experience it for yourself. The Wan team has thoughtfully provided multiple channels, so whether you’re a tech novice or a professional developer, you can easily get started.

For anyone who wants a quick try:

The easiest way is to go directly to their demo space on Hugging Face.

You don’t need to install any software or write a single line of code. Just upload a clear photo of a face on the webpage, then upload or record an audio clip, click the “generate” button, and after a short wait you’ll see your own talking video. This is perfect for creating fun social media content or surprising a friend.

For developers and researchers:

If you want to delve into the working principles of the model or integrate it into your own projects, then the GitHub repository is your treasure trove.

Here, you can find the complete source code, deployment instructions, and links to the model weights. For geeks who are eager to explore the boundaries of AI technology, this is undoubtedly the best gift.
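For a rough idea of what a local run might look like, the sketch below only assembles a command line and does not execute it. Everything specific in it is an assumption to verify against the repository’s README: the entry point `generate.py`, the task name `s2v-14B`, the flags `--ckpt_dir`, `--image`, `--audio`, and `--prompt`, and the accepted file extensions. The helper `build_s2v_command` is hypothetical.

```python
from pathlib import Path

# ASSUMPTION: these extension sets are illustrative, not an official list.
IMAGE_TYPES = {".jpg", ".jpeg", ".png"}
AUDIO_TYPES = {".wav", ".mp3"}


def build_s2v_command(image, audio, ckpt_dir, prompt=""):
    """Assemble an argument list for a local speech-to-video run.

    ASSUMPTION: the script name and every flag below must be checked
    against the repository's README before use.
    """
    if Path(image).suffix.lower() not in IMAGE_TYPES:
        raise ValueError(f"unsupported image type: {image}")
    if Path(audio).suffix.lower() not in AUDIO_TYPES:
        raise ValueError(f"unsupported audio type: {audio}")

    cmd = [
        "python", "generate.py",
        "--task", "s2v-14B",
        "--ckpt_dir", str(ckpt_dir),
        "--image", str(image),
        "--audio", str(audio),
    ]
    if prompt:
        # Optional text description of the scene.
        cmd += ["--prompt", prompt]
    return cmd
```

Once the flags are confirmed, the returned list could be passed to `subprocess.run(cmd, check=True)` from inside a clone of the repository, with the weights already downloaded to `ckpt_dir`.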

The team has also published an official blog post and a technical paper for researchers who want to dig into the algorithms and architecture behind the model.

Looking Ahead: Where can this technology be used?

The potential of Wan2.2-S2V goes far beyond making funny short videos. Its application scenarios cover almost all areas that require “human-computer interaction” and “content generation.”

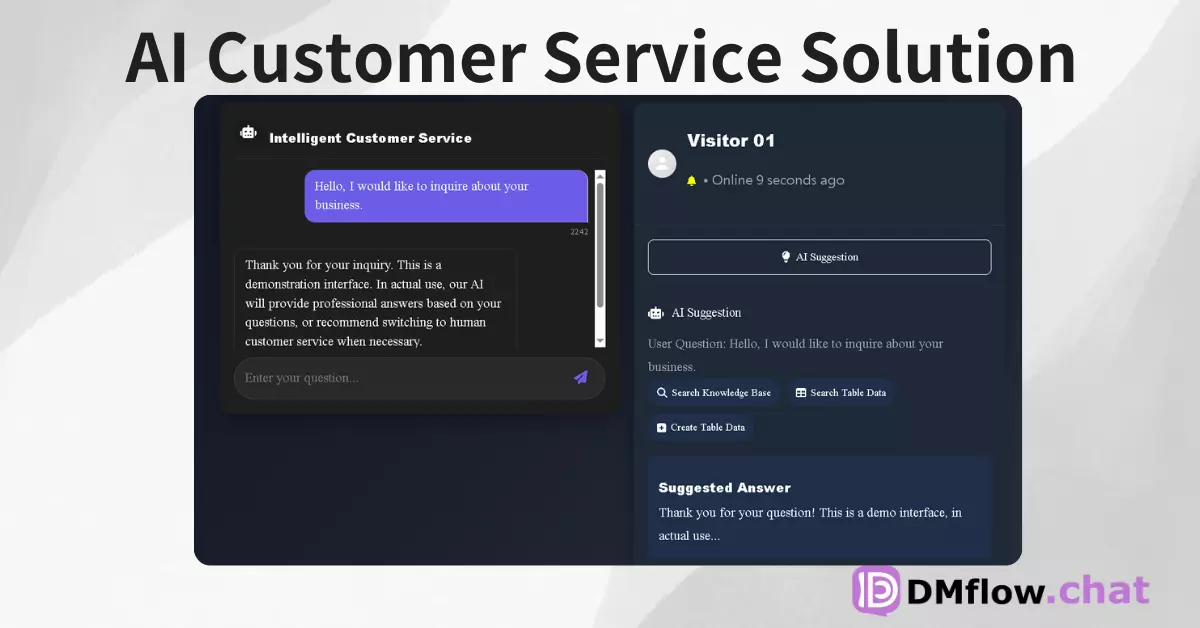

- Digital Humans and Virtual Customer Service: Companies can create tireless, 24/7 virtual customer service agents that answer customer questions with a friendly and natural image.

- Education and Training: Transform boring text-based teaching materials into video courses personally explained by historical figures or professional lecturers, greatly enhancing the learning experience.

- Content Creation Automation: Bloggers or news media can quickly convert articles into news videos broadcast by virtual anchors, significantly improving content production efficiency.

- Personalized Entertainment: Perhaps in the future, you can have any picture, whether it’s your idol, an anime character, or your cat at home, read a book to you or sing you a happy birthday song.

The emergence of this technology is blurring the line between the real and the virtual. It makes us rethink how we “communicate” and “express” ourselves. When any static face can be given a voice and emotion, a new world of creative possibilities is opening its doors to us.

Are you ready to make your photos talk?